A SQL Server FileTable is a special table that allows storing directory and files into the SQL Server. With this option, you can access these files and directories from windows applications as if they were stored in the file system.

Files stored in the FileTable are exposed to windows through a windows share.

If you create or change a file through the windows share the command is captured by a SQL Server component and the changes are applied to the corresponding data in the FileTable.

Since FileTable feature builds on top of SQL Server FILESTREAM technology, first you need to enable your SQL Server instance for filestream.

To enable FILESTREAM feature on SQL Server 2008:

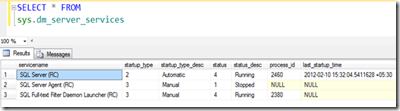

Open SQL Server Configuration Manager (Start > Programs > Microsoft SQL Server 2008 > Configuration Tools > SQL Server Configuration Manager)

Navigate to the SQL Server Services node and select the SQL Server instance you want to modify SQL Server (MSSQLSERVER)

Click the FILESTREAM tab and select the checkboxes to enable FILESTREAM and enter a share name for the files as shown below image.

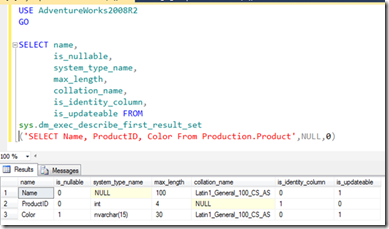

Next is to enable the filestream access level which can be done from either using a T-SQL or from the interface.

EXEC sys.sp_configure N'filestream access level', N'2'

GO

RECONFIGURE WITH OVERRIDE

GO

If you prefer to use user interface you can enable file stream by Right Click Server name from the management studio and select Properties and then select Advanced option and finally you can select the filestream option from the given option.

Let us create a database with filestream set to on.

USE MASTER

GO

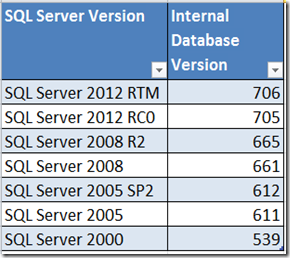

IF DATABASEPROPERTYEX('FileTableDatabase','Version') > 0

DROP DATABASE FileTableDatabase

CREATE DATABASE FileTableDatabase

ON PRIMARY

(

NAME = N'FileTableDatabase_Data',

FILENAME = N'D:\FileTable\FileTableDatabase.mdf'

),

FILEGROUP FilestreamFG CONTAINS FILESTREAM

(

NAME = N'FileTableDatabase_FileStream',

FILENAME= 'D:\FileTable\Data'

)

LOG ON

(

NAME = N'FileTableDatabase_Log',

FILENAME = N'D:\FileTable\FileTableDatabase_log.ldf'

)

WITH FILESTREAM

(

NON_TRANSACTED_ACCESS = FULL,

DIRECTORY_NAME = N'FileTable'

)

Next step is to create a File Table which will be the container for the files and directories.

USE FileTableDatabase

GO

CREATE TABLE FillTableDocument AS FileTable

WITH

(

FileTable_Directory = 'FileTableDocument'

)

You do not have a user interface option to create FileTables hence you need to write T-SQL as shown above.

After creating the FillTable, if you navigate to SQL Server Management Studio and expand FillTables node under the Tables node, you will see the created FileTable. If you expand Columns, you will see list columns.

In the File Table created, you can right click and select Explore FileTables Directory as shown in the below image.

This will take you to the File Directory where you can create your files. Now you have the option of placing files and creating directories in this folder which will automatically see from the File Table.

As you can see from the below image three different types of files are copied to the FileTable Directory and there is another sub folder is created along with few files. These files were copied from the operating system not from the SQL Server.

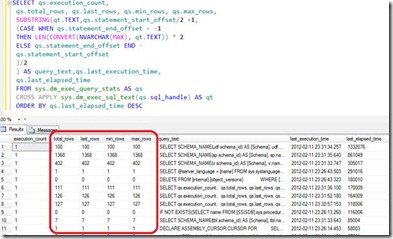

If you query the File table, you will see manually copied files are updated to the SQL Server.

If you want to rename files, you can do it from the OS level as well as from the T-SQL query as shown in the below script. T-SQL script will update table and accordingly file name will be changed and vice-versa. .

UPDATE

[FileTableDatabase].[dbo].[FillTableDocument]

SET name = 'FileTable4.txt'

WHERE [stream_id] = '2EB5608D-F270-E111-80DB-101F74EE0B01'

Since path_locator has data type of heirachyid you can use all the CLR functions.

Following will give you the operating system root path for the given File Table.

SELECT FileTableRootPath('dbo.FillTableDocument') as RootPath

For each file you can get the full file path with the following query.

SELECT name,file_stream.GetFileNamespacePath() full_path ,is_directory

FROM dbo.FillTableDocument c

Which has the following output.

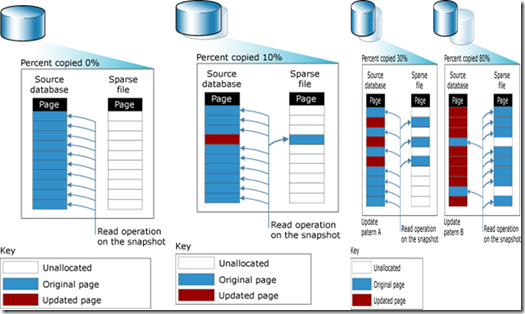

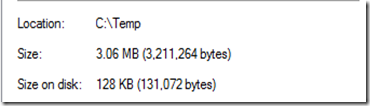

Another important point to note here is, all these files will not be saved in SQL Server database. This means that though you have large files in the operating system, your table sizes or database sizes will not be large in size. When you are querying the table, if you are selecting the file_stream column, for large files it will take little while to run the query since it reads the file stream by reading the entire file.

You can utilize File Table for handle image libraries, video libraries and CV management system.

Triggering a New File

Most of the time, users are required to track new file comes to the folder, For example, you might need to execute a SSIS package once new file is received. If you have File Table option enabled, you can simply create an INSERT trigger to the File table and execute SSIS within a trigger.