Thursday, December 23, 2021

What3Words - Expressing Your Location in Three Words

Monday, November 22, 2021

Most Popular Software Programming Languages In Pictures

If you are a developer, you might be wondering which language is best for application development. Let us look at the question through some pictures.

Thursday, November 18, 2021

Cluster Validation - Purity Calculation

Saturday, November 13, 2021

Hasan Ali & Tweets

Friday, November 5, 2021

Microsoft SQL Server 2022

After three years, Microsoft is gearing up to release the next version of its flagship database product, Microsoft SQL Server 2022. As with every new release, the obvious question is: what are the new features?

You can get more details from the following references.

What's new in SQL Server 2022 - YouTube

PASS Data Community Summit November 8-12 2021

SQL Server 2022 integrates with Azure Synapse Link and Azure Purview which will enable its users to drive more insights, predictions, and governance from their data at a higher scale. Cloud integration is enhanced with disaster recovery (DR) to Azure SQL Managed Instance, along with no-ETL (extract, transform, and load) connections to cloud analytics, which allow database administrators to manage their data estates with greater flexibility and minimal impact to the end-user. Performance and scalability are automatically enhanced via built-in query intelligence. There is choice and flexibility across languages and platforms, including Linux, Windows, and Kubernetes.

Thursday, November 4, 2021

Article: Use Replication to improve the ETL process in SQL Server

Sunday, October 24, 2021

Federalist Papers : Case for Naïve Bayes Text Classification

The Federalist Papers is a collection of 85 articles and essays written by Alexander Hamilton, James Madison, and John Jay. In 1787, after British rule in the US had ended, many were of the view that the 13 states should govern themselves independently.

John Jay, James Madison, and Alexander Hamilton independently wrote essays to persuade the public that the US should have a strong central government alongside the individual state governments. Between 1787 and 1788, these papers were published under the pseudonym Publius. While the authorship of 73 of The Federalist essays is fairly certain, the identities of those who wrote the twelve remaining essays are disputed by some scholars. In 1963, Mosteller and Wallace addressed this dispute using Bayesian methods.

Let us do a simple analysis of these papers by analysing their titles with the Orange Data Mining tool. You can retrieve the sample files and the Orange workflow from dineshasanka/FederalistPapersOrangeDataMining (github.com).

Following is the Orange Data Mining workflow and let us go through important controls.

Saturday, October 23, 2021

Monitor Your System Metrics With InfluxDB Cloud in Under One Minute

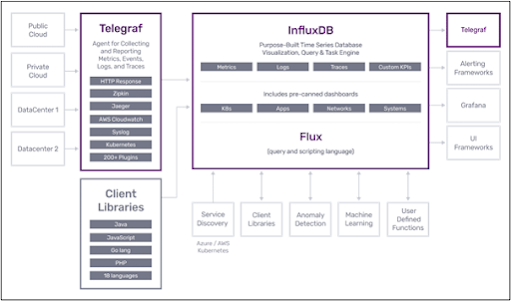

InfluxDB is a Time Series stack that will provide end-to-end features to capture, store and present time series data.

Following is the InfluxDB 2.0 stack that covers all aspects of time series data.

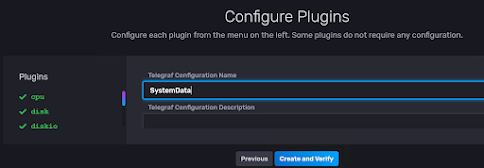

Let us see how we can use InfluxDB 2.0 to monitor the operating system.

First, you need to create an account on InfluxDB Cloud.

Next is to create a bucket to store the data.

Now set the environment variable and run Telegraf using the following commands.

Thursday, October 21, 2021

Resumable Index Rebuilding in SQL Server 2017

CREATE TABLE SampleData (
    ID INT IDENTITY(1,1) PRIMARY KEY CLUSTERED,
    Name CHAR(1000),
    AddressI CHAR(100)
);
GO

-- Load two million rows; GO <count> repeats the preceding batch in SSMS or sqlcmd.
INSERT INTO SampleData (Name, AddressI)
VALUES ('DINESH', 'AddressI');
GO 2000000

ALTER INDEX [PK__SampleD] ON [dbo].[SampleData] REBUILD WITH (ONLINE = ON, RESUMABLE = ON);

Now you can pause and resume the index rebuild. Run the PAUSE command from a separate session while the rebuild is in progress.

ALTER INDEX [PK__SampleD] ON [SampleData] PAUSE;

ALTER INDEX [PK__SampleD] ON [SampleData] RESUME;

Wednesday, October 20, 2021

Simple Rule Based Text Classification using Orange Data Mining

Wednesday, October 6, 2021

Time Series CheatSheet - v 9.0

This time we have a few more updates to the Time Series cheat sheet, which can be seen in the following image. The image size was changed because we are covering a few more components. You can get the original file from Time-Series-Cheat-Sheet.

Monday, October 4, 2021

Orange Data Mining - Text Processing

Saturday, October 2, 2021

Article: Text Classification in Azure Machine Learning using Word Vector

WEKA (Waikato Environment for Knowledge Analysis), developed at the University of Waikato, New Zealand, is a good tool for text information retrieval because it offers features such as Term Frequency (TF), Inverse Document Frequency (IDF), n-gram tokenization, stopwords, stemming, and document length normalization.
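To make the TF and IDF terms concrete, here is a minimal Python sketch of one common TF-IDF variant (relative term frequency multiplied by the natural log of inverse document frequency). It is an illustration only and is not WEKA's exact formula. The function name `tf_idf` and the sample documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    Assumed variant: tf = count / document length,
    idf = ln(number of documents / documents containing the term).
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical sample documents, for illustration only.
docs = ["sql server index", "sql server backup", "orange data mining"]
weights = tf_idf(docs)
```

A term that appears in fewer documents ("index") receives a higher weight than one that appears in several ("sql"), which is why TF-IDF is useful for separating documents by topic.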

The latest article, Text Classification in Azure Machine Learning using Word Vectors, describes how the word vector output from WEKA can be used in Azure Machine Learning to achieve better classification.

Following is the table of contents for the article series on Azure Machine Learning.

Thursday, September 23, 2021

Recovering Deleted Data in SQL Server Databases

How many times have you come across unexpected data deletion in a production environment and ended up looking for costly tools to recover your data? If you cannot recover your data, there can be situations where you are put out of business.

How do you plan for these accidental or deliberate data deletions? Point in Time Recovery in SQL Server is the option that allows you to recover the deleted data. However, you need a better understanding of SQL Server Recovery Models and Transaction Log Use in order to enable Point in Time Recovery.

This is an important configuration that needs to be in place beforehand; there is no point complaining later.

Tuesday, September 21, 2021

Article : Building Ensemble Classifiers in Azure Machine Learning

A new article in the series, Building Ensemble Classifiers in Azure Machine Learning, discusses how to combine multiple classifiers.

With ensemble classifiers, we look at how to make predictions using multiple classification techniques so that the combined model achieves higher accuracy or avoids overfitting. This is equivalent to a patient consulting multiple specialist doctors to diagnose a disease rather than relying on one doctor.
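The idea of consulting several "specialists" can be sketched in plain Python as a majority vote. This is only one simple way to combine classifiers and is not necessarily the method used in the Azure Machine Learning article. The three rule-based classifiers, their thresholds, and the patient record are all hypothetical.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label that the most classifiers predicted."""
    return Counter(predictions).most_common(1)[0][0]


# Three hypothetical "specialists": simple rules over a patient record.
def by_temperature(patient):
    return "flu" if patient["temperature_c"] >= 38.0 else "healthy"


def by_cough(patient):
    return "flu" if patient["cough"] else "healthy"


def by_fatigue(patient):
    return "flu" if patient["fatigue_score"] > 5 else "healthy"


CLASSIFIERS = [by_temperature, by_cough, by_fatigue]


def ensemble_predict(patient):
    """Collect one vote per classifier and return the majority label."""
    votes = [classify(patient) for classify in CLASSIFIERS]
    return majority_vote(votes)


# Hypothetical patient: two of the three rules vote "flu".
patient = {"temperature_c": 38.4, "cough": False, "fatigue_score": 7}
print(ensemble_predict(patient))
```

Using an odd number of voters with two possible labels means a tie cannot occur, so a single mistaken classifier is outvoted by the other two.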

Thursday, September 16, 2021

Grouping the Flags - Image Processing using Orange

If you look at the flags of different countries, you will notice that some of them look similar. This post explains how the Orange Data Mining tool can be used to cluster images into groups. You can get the dataset and the Orange package from ImageProcessing-Orange (github.com).

This is a simple data mining package, and it shows how easily you can perform image processing in Orange.