Writing is my passion, and it has opened many avenues for me over the years. I thought I would combine all of my data warehouse-related articles into one post, grouped by area of data warehousing.

DESIGN

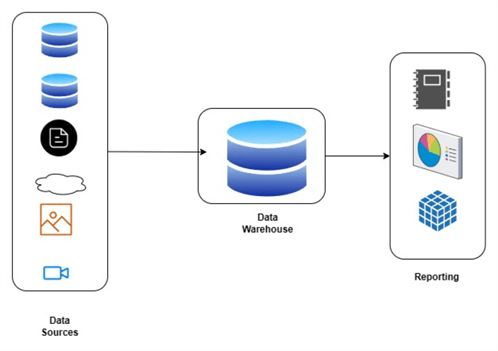

• What is a Data Warehouse? (mssqltips.com)

• Things you should avoid when designing a Data Warehouse (sqlshack.com)

• Infrastructure Planning for a SQL Server Data Warehouse (mssqltips.com)

• Why Surrogate Keys are Needed for a SQL Server Data Warehouse (mssqltips.com)

• Create an Extended Date Dimension for a SQL Server Data Warehouse (mssqltips.com)

• SQL Server Temporal Tables Overview (mssqltips.com)

• Data Warehousing Best Practices for SQL Server (mssqltips.com)

• Testing Type 2 Slowly Changing Dimensions in a Data Warehouse (sqlshack.com)

• Implementing Slowly Changing Dimensions (SCDs) in Data Warehouses (sqlshack.com)

• Incremental Data Extraction for ETL using Database Snapshots (sqlshack.com)

• Use Replication to improve the ETL process in SQL Server (sqlshack.com)

• Using the SSIS Script Component as a Data Source (sqlshack.com)

• Fuzzy Lookup Transformations in SSIS (sqlshack.com)

• SSIS Conditional Split overview (sqlshack.com)

• Loading Historical Data into a SQL Server Data Warehouse (mssqltips.com)

• Retry SSIS Control Flow Tasks (mssqltips.com)

• SSIS CDC Tasks for Incremental Data Loading (mssqltips.com)

• Multi-language support for SSAS (sqlshack.com)

• Enhancing Data Analytics with SSAS Dimension Hierarchies (sqlshack.com)

• Improve readability with SSAS Perspectives (sqlshack.com)

• SSAS Database Management (sqlshack.com)

• OLAP Cubes in SQL Server (sqlshack.com)

• SSAS Hardware Configuration Recommendations (mssqltips.com)

• Create KPI in a SSAS Cube (mssqltips.com)

• Monitoring SSAS with Extended Events (mssqltips.com)

SSRS

• Exporting SSRS reports to multiple worksheets in Excel (sqlshack.com)

• Enhancing Customer Experiences with Subscriptions in SSRS (sqlshack.com)

• Alternate Row Colors in SSRS (sqlshack.com)

• Migrate On-Premises SQL Server Business Intelligence Solution to Azure (mssqltips.com)

OTHER

• Dynamic Data Masking in SQL Server (sqlshack.com)

• Data Disaster Recovery with Log Shipping (sqlshack.com)

• Using the SQL Server Service Broker for Asynchronous Processing (sqlshack.com)

• SQL Server auditing with Server and Database audit specifications (sqlshack.com)

• Archiving SQL Server data using Partitions (sqlshack.com)

• Script to Create and Update Missing SQL Server Columnstore Indexes (mssqltips.com)

• SQL Server Clustered Index Behavior Explained via Execution Plans (mssqltips.com)

• SQL Server Maintenance Plan Index Rebuild and Reorganize Tasks (mssqltips.com)

• SQL Server Resource Governor Configuration with T-SQL and SSMS (mssqltips.com)